Self-Supervised Learning

2023

- Shwartz-Ziv, R., & LeCun, Y. (2023). To Compress or Not to Compress–Self-Supervised Learning and Information Theory: A Review. arXiv preprint arXiv:2304.09355.

- RG: The role of information theoretic information bottleneck is unclear in SSL context.

- TP: scrutinize various SSL approaches from an information theoretic perspective, introducing a unified framework that encapsulates SS information-theoretic learning problem

- weave together existing research into a cohesive narrative, delve into contemporary self-supervised methodologies, and spotlight potential research avenues and inherent challenges

- discuss the empirical evaluation of information-theoretic quantities and their estimation methods

- TP furnishes an exhaustive review of the intersection of information theory, self-supervised learning, and deep neural networks.

- Section 5: Optimizing Information in Deep Neural Networks: Challenges and Approaches

- Measuring the information in High dimensional spaces.

- Balestriero, R., Ibrahim, M., Sobal, V., Morcos, A., Shekhar, S., Goldstein, T., … & Goldblum, M. (2023). A cookbook of self-supervised learning. arXiv preprint arXiv:2304.12210.

- Origin of SSL:

- information restoration: masked image prediction, colorization,

- Temporal relationship in the video:

- Learning spatial context: RotNet, Jigsaw

- Grouping similar image together: k-mean clustering, mean-shift, optimal-transport

- Generative Model: autoencoder, RBM, GAN

- Multi-view invariance: modern methods.

- Deep metric learning family: SimCLR/NNCLR/Meanshift/SCL

- self-distillation family: BYOL/SimSIAM/DINO

- Canonical correlation analysis: VICReg/BarlowTwins/SWAV/W-MSE

- Origin of SSL:

- Cabannes, V., Bottou, L., Lecun, Y., & Balestriero, R. (2023). Active Self-Supervised Learning: A Few Low-Cost Relationships Are All You Need. arXiv preprint arXiv:2303.15256.

- generalize and formalize this principle through Positive Active Learning (PAL) where an oracle queries semantic relationships between samples

- unveils a theoretical learning framework beyond SSL, that can be extended to tackle supervised and semi-supervised depending on oracle

- provides algorithm to embed a priori knowledge, e.g. some observed labels, into any SSL losses without any change in the training.

- provides an active learning framework to bridge the gap between theory and practice of AL (!), based on simple-to-answer-by-nonexperts queries of semantic relationships between inputs.

- Research gap in SSL: combine the label information or any priori knowledge?

- TP: redefine existing SSL in terms of a similarity graph – nodes represent data samples and edges reflect known inter-sample relationships

- think about learning in terms of similarity graph: yields a spectrum on which SSL and supervised learning can be seen as two extremes

- TP: use a similarity graph to define the SSL and supervised training losses to reduce cost and expert requirement of active learning

- Active learning in SSL: what are similar and what not?

- strategize Positive Active Learning (PAL), and present some key analysis on the benefits of PAL over traditional active learning

- GEM paper of k-partitioned graphs

- supervised setting: C partitioned (C disconnected components)

- direct observation that VICReg is akin to Laplacian Eigenmaps or multidimensional scaling, SimCLR is akin to Cross-entropy and BarlowTwins is akin to Canonical Correlation Analysis [theorem 1]

- generalize and formalize this principle through Positive Active Learning (PAL) where an oracle queries semantic relationships between samples

-

Shwartz-Ziv, R., Balestriero, R., Kawaguchi, K., Rudner, T. G., & LeCun, Y. (2023). An Information-Theoretic Perspective on Variance-Invariance-Covariance Regularization. arXiv preprint arXiv:2303.00633.

-

Same paper of what do we maximize in SSL (paper from 2022)

- demonstrate how information-theoretic quantities can be obtained for deterministic networks as an alternative to the commonly used unrealistic stochastic networks assumption.

- relate the VICReg objective to mutual information maximization and use it to highlight the underlying assumptions of the objective.

- derive a generalization bound for VICReg, providing generalization guarantees for downstream tasks and present new SSL

-

2015

-

Dosovitskiy, Alexey, Philipp Fischer, Jost Tobias Springenberg, Martin Riedmiller, and Thomas Brox. “Discriminative unsupervised feature learning with exemplar convolutional neural networks.” IEEE transactions on pattern analysis and machine intelligence 38, no. 9 (2015): 1734-1747.

-

Doersch, Carl, Abhinav Gupta, and Alexei A. Efros. “Unsupervised visual representation learning by context prediction.” In Proceedings of the IEEE international conference on computer vision, pp. 1422-1430. 2015.

-

Crop position learning pretext!

-

Figure 2 (problem formulation) and 3 (architectures) shows the key contribution

-

-

Tishby, Naftali, and Noga Zaslavsky. “Deep learning and the information bottleneck principle.” In 2015 IEEE Information Theory Workshop (ITW), pp. 1-5. IEEE, 2015.

-

Koch, Gregory, Richard Zemel, and Ruslan Salakhutdinov. “Siamese neural networks for one-shot image recognition.” In ICML deep learning workshop, vol. 2. 2015.

- Original contrastive approach with two (either similar or dissimilarity) images [algorithmic]

-

Wang, Xiaolong, and Abhinav Gupta. “Unsupervised learning of visual representations using videos.” In Proceedings of the IEEE international conference on computer vision, pp. 2794-2802. 2015.

-

Visual tracking provides the supervision!!! [sampling method for CL]

-

Siamese-triplet networks: energy based max-margin loss

-

Experiments: VOC 2012 dataset (100k Unlabeled videos)

-

interesting loss functions (self note: please update the pdf files)

-

2016

-

Xie, Junyuan, Ross Girshick, and Ali Farhadi. “Unsupervised deep embedding for clustering analysis.” In International conference on machine learning, pp. 478-487. PMLR, 2016.

-

Deep Embedded Clustering (DEC) Learns (i) Feature representation (ii) cluster assignments

-

Experiment: Image and text corpora

-

Contribution: (a) joint optimization of deep embedding and clustering; (b) a novel iterative refinement via soft assignment (??); (c) state-of-the-art clustering results in terms of clustering accuracy and speed

-

target distribution properties: (1) strengthen predictions (i.e., improve cluster purity), (2) put more emphasis on data points assigned with high confidence, and (3) normalize loss contribution of each centroid to prevent large clusters from distorting the hidden feature space.

-

Computational complexity as iteration over large data samples

-

Assumptions and objectives: The underlying assumption of DEC is that the initial classifier’s high confidence predictions are mostly correct

-

Two metrics: NMI (normalized MI), and Generalizablity: L_tr / L_val

-

-

Joulin, Armand, Laurens Van Der Maaten, Allan Jabri, and Nicolas Vasilache. “Learning visual features from large weakly supervised data.” In European Conference on Computer Vision, pp. 67-84. Springer, Cham, 2016.

-

Sohn, Kihyuk. “Improved deep metric learning with multi-class n-pair loss objective.” In Proceedings of the 30th International Conference on Neural Information Processing Systems, pp. 1857-1865. 2016.

- Deep metric learning (solves the slow convergence for the contrastive and triple loss)

- what is the penalty??

-

How they compared the convergences

- This paper: Multi-class N-pair loss

- developed in two steps (i) Generalization of triplet loss (ii) reduces computational complexity by efficient batch construction (figrue 2) taking (N+1)xN examples!!

- This paper: Multi-class N-pair loss

-

Experiments on visual recognition, object recognition, and verification, image clustering and retrieval, face verification and identification tasks.

-

identify multiple negatives [section 3], efficient batch construction

- Deep metric learning (solves the slow convergence for the contrastive and triple loss)

-

Noroozi, Mehdi, and Paolo Favaro. “Unsupervised learning of visual representations by solving jigsaw puzzles.” In European conference on computer vision, pp. 69-84. Springer, Cham, 2016.

- Pretext tasks (solving jigsaw puzzle) - self-supervised

-

Misra, Ishan, C. Lawrence Zitnick, and Martial Hebert. “Shuffle and learn: unsupervised learning using temporal order verification.” In European Conference on Computer Vision, pp. 527-544. Springer, Cham, 2016.

- Pretext Task: a sequence of frames from a video is in the correct temporal order (figure 1) [sampling method for CL]

- Capture temporary variations

- Fusion and classification [not the CL directly]

-

experiment Net: CNN Based network, data: UCF101, HMDB51 & FLIC, MPII (pose Estimation)

- self note: There’s more.

- Pretext Task: a sequence of frames from a video is in the correct temporal order (figure 1) [sampling method for CL]

-

Oh Song, Hyun, Yu Xiang, Stefanie Jegelka, and Silvio Savarese. “Deep metric learning via lifted structured feature embedding.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4004-4012. 2016.

- Proposes a different loss function (Equation-3)

- Non-smooth and requires special data mining

- Solution: This paper: optimize upper bound of eq3, instead of mining use stochastic approach!!

- This paper: all pairwise combination in a batch!! O(m2)

- uses mini batch

- Not-random batch formation: Importance Sampling

- Hard-negative mining

-

Gradient finding in the algorithm-1, mathematical analysis

- Discusses one of the fundamental issues with contrastive loss and triplet loss!

- different batches puts same class in different position

-

Experiment: Amazon Object dataset- multiview .

- Proposes a different loss function (Equation-3)

2017

-

Qi, C., & Su, F. (2017, September). Contrastive-center loss for deep neural networks. In 2017 IEEE international conference on image processing (ICIP) (pp. 2851-2855). IEEE.

-

Randomized center selection, class assignment, and constrastive learning to enforce closeness between similar classes.

-

Eq 2 [loss function]

-

-

Santoro, Adam, David Raposo, David GT Barrett, Mateusz Malinowski, Razvan Pascanu, Peter Battaglia, and Timothy Lillicrap. “A simple neural network module for relational reasoning.” arXiv preprint arXiv:1706.01427 (2017).

-

Zhang, Richard, Phillip Isola, and Alexei A. Efros. “Split-brain autoencoders: Unsupervised learning by cross-channel prediction.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1058-1067. 2017.

- Extension of autoencoders to cross channel prediction [algorithmic]

- Predict one portion to other and vice versa + loss on full reconstruction.

- Two disjoint auto-encoders.

- Tried both the regression and classification loss

- Section 3 sums it up

- Cross-channel encoders

- Split-brain autoencoders.

- Cross-channel encoders

- Section 3 sums it up

- Extension of autoencoders to cross channel prediction [algorithmic]

-

Fernando, Basura, Hakan Bilen, Efstratios Gavves, and Stephen Gould. “Self-supervised video representation learning with odd-one-out networks.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3636-3645. 2017.

- Pretext tasks: Finding the odd-one (O3N) video using fusion. [sampling method for CL]

- Temporal odd one! Target: Regression task

-

Network: CNN with fusion methods.

- Experiments: HMDB51, UCF101

- Pretext tasks: Finding the odd-one (O3N) video using fusion. [sampling method for CL]

-

Denton, Emily L. “Unsupervised learning of disentangled representations from video.” In Advances in neural information processing systems, pp. 4414-4423. 2017.

-

Encoder-Decoder set up for the disentangled [disentanglement representation]

-

Hypothesis: Content (time invariant) and Pose (time variant)

-

Two Encoders for the pose and content; Concatenate the output for single Decoder

-

Introduce adversarial loss

-

Video generation conditioned on context, and pose modeling via LSTM.

-

2018

-

Károly, A. I., Fullér, R., & Galambos, P. (2018). Unsupervised clustering for deep learning: A tutorial survey. Acta Polytechnica Hungarica, 15(8), 29-53.

- NN: Self-organizing maps, Kohonen maps, Adaptive resonance theory, Autoencoders, Co-localization, Generative Models.

-

Aljalbout, Elie, Vladimir Golkov, Yawar Siddiqui, Maximilian Strobel, and Daniel Cremers. “Clustering with deep learning: Taxonomy and new methods.” arXiv preprint arXiv:1801.07648 (2018).

-

Three components: Main encoder networks (concerns with architecture, Losses, and cluster assignments)

-

Non-cluster loss: Autoencoder reconstruction losses

-

Various types of clustering loss (note)

-

Combine losses: Pretraining / jointly training / variable scheduleing

-

Cluster update: Jointly update with the network model / Alternating update with network models

-

Relevant methods: Deep Embedded Clustering (Xie et al, 2016), Deep Clustering Network (yang et al, 2016), Discriminatively Boosted Clustering (Li et al, 2017), ..

-

-

Belghazi, Mohamed Ishmael, Aristide Baratin, Sai Rajeshwar, Sherjil Ozair, Yoshua Bengio, Aaron Courville, and Devon Hjelm. “Mutual information neural estimation.” In International Conference on Machine Learning, pp. 531-540. 2018.

- present a Mutual Information Neural Estimator (MINE): linearly scalable with dimensionality and sample size, trainable through back-prop

- improve adversarially trained generative models and implement the Information Bottleneck, applying it to supervised classification

- Algorithm 1: Key (careful about the bar)

- present a Mutual Information Neural Estimator (MINE): linearly scalable with dimensionality and sample size, trainable through back-prop

-

Hjelm, R. Devon, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. “Learning deep representations by mutual information estimation and maximization.” arXiv preprint arXiv:1808.06670 (2018).

- locality of input knowledge and match prior distribution adversarially (DeepInfoMax)

-

Maximize input and output MI

- Experimented on Images

- Compared with VAE, BiGAN, CPC

- Experimented on Images

-

- Evaluate represenation by Neural Dependency Measures (NDM)

-

Global features (Anchor, Query) and Local features of the query (+), local feature map of random images

- locality of input knowledge and match prior distribution adversarially (DeepInfoMax)

-

Wu, Zhirong, Alexei A. Efros, and Stella X. Yu. “Improving generalization via scalable neighborhood component analysis.” In Proceedings of the European Conference on Computer Vision (ECCV), pp. 685-701. 2018.

-

SCNA (Modified version of the NCA approach)

-

Aim to reduce the computation complexity of the NCA by taking two assumptions

- Partial gradient update making the mini-batch gradient update possible - TP: Device a mechanism called augmented memory for the generalization. (a version of momentum update!) -

-

Caron, Mathilde, Piotr Bojanowski, Armand Joulin, and Matthijs Douze. “Deep clustering for unsupervised learning of visual features.” In Proceedings of the European Conference on Computer Vision (ECCV), pp. 132-149. 2018.

- Cluster Deep features and make them pseudo labels. [fig 1]

- Cluster (k-means) for training CNN [Avoid trivial solution of all zeros!]

- Motivation from Unsupervised feature learning, self-supervised learning, generative model

- More

-

Oord, Aaron van den, Yazhe Li, and Oriol Vinyals. “Representation learning with contrastive predictive coding.” arXiv preprint arXiv:1807.03748 (2018).

- Predicting the future [self-supervised task design]

- derive the concept of context vector (from earlier representation)

- use the context vector for future representation prediction.

- derive the concept of context vector (from earlier representation)

- TP: Great works with some foundation of CL

- probabilistic (AR) contrastive loss!!

- in latent space

- probabilistic (AR) contrastive loss!!

- Experiments on the speech, image, text and RL

- CPC (3 things) - Aka- InfoNCE (coining the term)

- compression, autoregressive and NCE

- CPC (3 things) - Aka- InfoNCE (coining the term)

-

Energy based like setup

-

Figure 4: about what they did!

-

- Predicting the future [self-supervised task design]

-

Wu, Zhirong, Yuanjun Xiong, Stella X. Yu, and Dahua Lin. “Unsupervised feature learning via non-parametric instance discrimination.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3733-3742. 2018.

-

non-parametric classifier via feature representation (Memory Bank)

- Memory bank stores instance features (used for kNN classifier)

- Dimention reduction: one of the key [algorithmic] contribution

- Experiments

- obj detect and image classification

- connect to

- selfsupervised learning (related works) and Metric learning (unsupervised fashion)

- NCE (to tackle class numbers) - [great idea, just contrast with everything else in E we get the classifier]

- instance-level discrimination, non-parametric classifier.

- compared with known example (non-param.)

- interesting setup section 3

- representation -> class (image itself) (compare with instance) -> loss function (plays the key role to distinguish)

- NCE from memory bank

- Monte carlo sampling to get the all contrastive normalizing value for denominator

- proximal parameter to ensure the smoothness for the representations {proximal regularization:}

- Memory bank stores instance features (used for kNN classifier)

-

-

Sermanet, Pierre, Corey Lynch, Yevgen Chebotar, Jasmine Hsu, Eric Jang, Stefan Schaal, Sergey Levine, and Google Brain. “Time-contrastive networks: Self-supervised learning from video.” In 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 1134-1141. IEEE, 2018.

- Multiple view point [same times are same, different time frames are different], motion blur, viewpoint invariant

- Regardless of the viewpoint [same time same thing , same representation]

- Considered images [sampling method for CL]

- Representation is the reward

- TCN - a embedding {multitask embedding!}

-

imitation learning

-

PILQR for RL parts

- Huber-style loss

- Multiple view point [same times are same, different time frames are different], motion blur, viewpoint invariant

2019

-

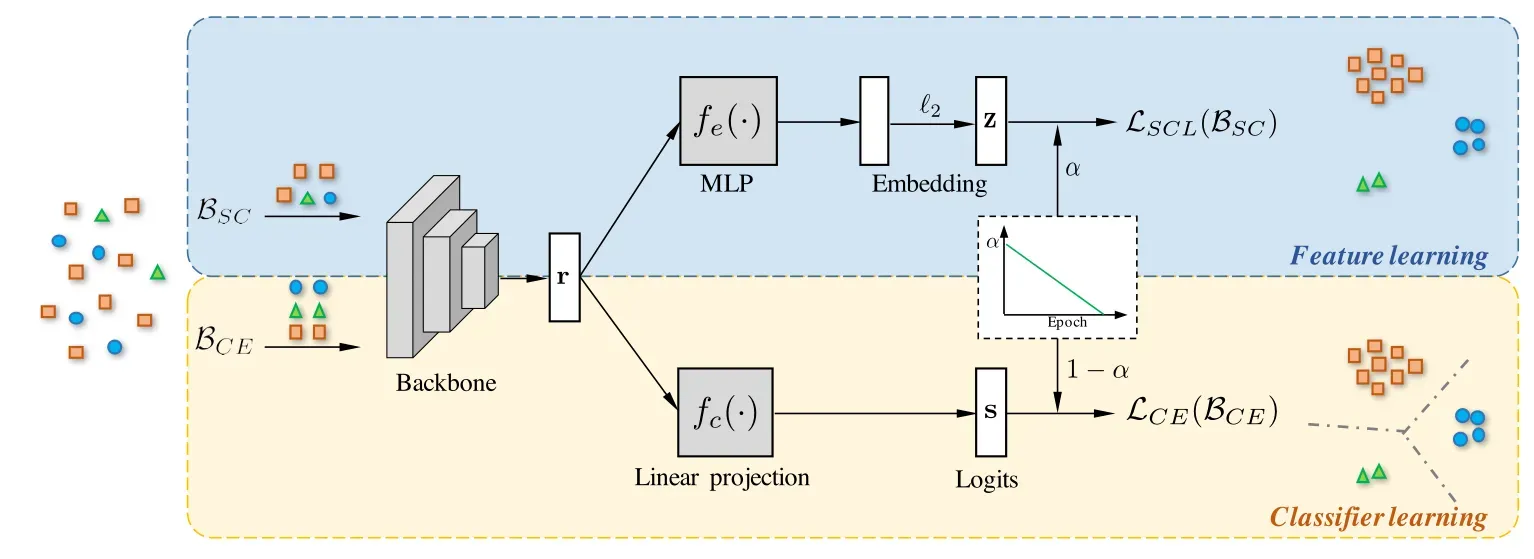

Kang, B., Xie, S., Rohrbach, M., Yan, Z., Gordo, A., Feng, J., & Kalantidis, Y. (2019). Decoupling representation and classifier for long-tailed recognition. arXiv preprint arXiv:1910.09217.

- decouple the learning procedure into representation learning and classification, and systematically explore how different balancing strategies affect them for long-tailed recognition

- Findings (1) data imbalance might not be an issue in learning high-quality representations; (2) with representations learned with the simplest instance-balanced (natural) sampling, achieve strong long-tailed recognition ability by adjusting only the classifier.

- common long-tailed benchmarks like ImageNet-LT, Places-LT and iNaturalist (achieve sota with decoupling representation and classifier)

- Approach: first train models to learn representations with different sampling strategies (standard instance-based sampling, class-balanced sampling and their mixture). Next, we study three different basic approaches to obtain a classifier with balanced decision boundaries, on top of the learned representations. (re-training the parametric linear classifier in a class-balancing manner (i.e., re-sampling); non-parametric nearest class mean classifier, normalizing the classifier weights, which adjusts the weight magnitude directly to be more balanced by adding a temperature to modulate the normalization procedure.

- decouple the learning procedure into representation learning and classification, and systematically explore how different balancing strategies affect them for long-tailed recognition

-

Wu, J., Long, K., Wang, F., Qian, C., Li, C., Lin, Z., & Zha, H. (2019). Deep comprehensive correlation mining for image clustering. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 8150-8159).

- TP DCCM

- incorporate other (three??) useful correlation! (as traditional approaches only utilizes the sample correlation)

- Instead of pairwise, pseudo-label supervision is proposed to investigate category information and learn discriminative features.

- features’ robustness to image transformation of input space is fully (!) explored- consistency loss!!

- The triplet mutual information among features is presented for clustering problem to lift the recently discovered instance-level deep MI to a triplet-level formation

- Correlation among different feature layers! (My idea: sharpening)

- Taxonomy:

- Pseudo-graph supervision: Pairwise matching (contrastive loss)

- Pseudo-label supervision: k-label partition and supervision (e.g. sinkhorn-knopp, knn)

-

Dwibedi, Debidatta, Yusuf Aytar, Jonathan Tompson, Pierre Sermanet, and Andrew Zisserman. “Temporal cycle-consistency learning.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1801-1810. 2019.

- Temporal video alignment problem: the task of finding correspondences across multiple videos despite many factors of variation

- TP: Temporal cycle consistency losses (complementary to other methods [TCN, shuffle and learn])

- Dataset: Penn AR, Pouring dataset

- TP: Focus on temporal reasoning!! (metrics)

- learns representations by aligning video sequences of the same action

- Requires differentiable cycle-consistency losses

- TP: Focus on temporal reasoning!! (metrics)

-

- Figure 2: interesting but little hard to understand

-

Li, Xueting, Sifei Liu, Shalini De Mello, Xiaolong Wang, Jan Kautz, and Ming-Hsuan Yang. “Joint-task self-supervised learning for temporal correspondence.” arXiv preprint arXiv:1909.11895 (2019).

-

Xie, Qizhe, Zihang Dai, Eduard Hovy, Minh-Thang Luong, and Quoc V. Le. “Unsupervised data augmentation for consistency training.” arXiv preprint arXiv:1904.12848 (2019).

- TP: how to effectively noise unlabeled examples (1) and importance of advanced data augmentation (2)

- Investigate the role of noise injection and advanced data augmentation

- Proposes better data augmentation in consistency training: Unsupervised Data Augmentation (UDA)

- Experiments with vision and language tasks

-

Bunch of experiment with six language tasks and three vision tasks.

-

Consistency training as regularization.

-

UDA: Augment unlabeled data!! and quality of the noise for augmentations.

-

Noise types: Valid noise, Diverse noise, and Targeted Inductive biases

-

Augmentation types: RandAugment for image, backtranslating the language

-

Training techniques: confidence based masking, Sharpening Predictions, Domain relevance data filtering.

-

Interesting graph comparison under three assumption.

- TP: how to effectively noise unlabeled examples (1) and importance of advanced data augmentation (2)

-

Anand, Ankesh, Evan Racah, Sherjil Ozair, Yoshua Bengio, Marc-Alexandre Côté, and R. Devon Hjelm. “Unsupervised state representation learning in atari.” arXiv preprint arXiv:1906.08226 (2019).

-

Alwassel, Humam, Dhruv Mahajan, Bruno Korbar, Lorenzo Torresani, Bernard Ghanem, and Du Tran. “Self-supervised learning by cross-modal audio-video clustering.” arXiv preprint arXiv:1911.12667 (2019).

-

Sun, Chen, Fabien Baradel, Kevin Murphy, and Cordelia Schmid. “Learning video representations using contrastive bidirectional transformer.” arXiv preprint arXiv:1906.05743 (2019).

-

Han, Tengda, Weidi Xie, and Andrew Zisserman. “Video representation learning by dense predictive coding.” In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, pp. 0-0. 2019.

- Self-supervised AR (DPC)

- Learn dense coding of spatio-temporal blocks by predicting future frames (decrease with time!)

- Training scheme for future prediction (using less temporal data)

-

Care for both temporal and spatial negatives.

-

Look at their case for - the easy negatives (patches encoded from different videos), the spatial negatives (same video but at different spatial locations), and the hard negatives (TCN)

-

performance evaluated by Downstream tasks (Kinetics-400 dataset (pretrain), UCF101, HMDB51- AR tasks)

- Section 3.1 and 3.2 are core (contrastive equation - 5)

- Self-supervised AR (DPC)

-

Ye, Mang, Xu Zhang, Pong C. Yuen, and Shih-Fu Chang. “Unsupervised embedding learning via invariant and spreading instance feature.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6210-6219. 2019.

- Contrastive idea but uses siamese network.

-

Bachman, Philip, R. Devon Hjelm, and William Buchwalter. “Learning representations by maximizing mutual information across views.” arXiv preprint arXiv:1906.00910 (2019).

- Multiple view of shared context - Why MI (analogous to human representation, How??) - same understanding regardless of view - Whats the problem with others !!- Experimented with Imagenet

- Extension of local DIM in 3 ways (this paper calls it - augmented multi-scale DIM (AMDIM))

- Predicts features for independently-augmented views

- predicts features across multiple views

- Uses more powerful encoder

- Extension of local DIM in 3 ways (this paper calls it - augmented multi-scale DIM (AMDIM))

- Methods relevance: Local DIM, NCE, Efficient NCE computation, Data Augmentation, Multi-scale MI, Encoder, mixture based representation

- Experimented with Imagenet

-

Goyal, Priya, Dhruv Mahajan, Abhinav Gupta, and Ishan Misra. “Scaling and benchmarking self-supervised visual representation learning.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6391-6400. 2019.

-

Chen, Xinlei, Haoqi Fan, Ross Girshick, and Kaiming He. “Improved baselines with momentum contrastive learning.” arXiv preprint arXiv:2003.04297 (2020).

-

Tian, Yonglong, Dilip Krishnan, and Phillip Isola. “Contrastive multiview coding.” arXiv preprint arXiv:1906.05849 (2019).

-

Find the invariant representation

- Multiple view of objects (image) (CMC) - multisensor view or same object!!]

- Same object but different sensors (positive keys)

- Different object same sensors (negative keys)

- Experiment: ImageNEt, STL-10, two views, DIV2K cropped images

-

Positive pairs (augmentation)

-

Follow-up of Contrastive Predictive coding (no RNN but more generalized)

-

Compared with baseline: cross-view prediction!!

- Interesting math: Section 3 and experiment 4

- Mutual information lower bound Log(k = negative samples)- Lcontrastive

- Memory bank implementation

- Mutual information lower bound Log(k = negative samples)- Lcontrastive

-

-

Zhuang, Chengxu, Alex Lin Zhai, and Daniel Yamins. “Local aggregation for unsupervised learning of visual embeddings.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6002-6012. 2019.

-

Tschannen, Michael, Josip Djolonga, Paul K. Rubenstein, Sylvain Gelly, and Mario Lucic. “On mutual information maximization for representation learning.” arXiv preprint arXiv:1907.13625 (2019).

-

Löwe, Sindy, Peter O’Connor, and Bastiaan S. Veeling. “Putting an end to end-to-end: Gradient-isolated learning of representations.” arXiv preprint arXiv:1905.11786 (2019).

-

Tian, Yonglong, Dilip Krishnan, and Phillip Isola. “Contrastive representation distillation.” arXiv preprint arXiv:1910.10699 (2019).

-

Missed structural knowledge of the teacher network!!

- Cross modal distillation!!

- KD -all dimension are independent (intro 1.2)

- Capture the correlation/higher-order dependencies in the representation (how??).

- Maximize MI between teacher and student.

- KD -all dimension are independent (intro 1.2)

-

Three scenario considered [fig 1]

-

KD and representation learning connection (!!)

-

large temperature increases entropy [look into the equation! easy-pesy]

-

interesting proof and section 3.1 [great!]

-

Student takes query - matches with positive keys from teacher and contrast with negative keys from the teacher network.

-

Equation 20 is cross entropy (stupid notation)

-

Key contribution: New loss function: 3.4 eq21

-

-

Saunshi, Nikunj, Orestis Plevrakis, Sanjeev Arora, Mikhail Khodak, and Hrishikesh Khandeparkar. “A theoretical analysis of contrastive unsupervised representation learning.” In International Conference on Machine Learning, pp. 5628-5637. 2019.

- present a framework for analyzing CL (is there any previous?) - introduce latent class!! shows generalization bound. - Unsupervised representation learning - TP: notion of latent classes (downstream tasks are subset of latent classes), rademacher complexity of the architecture! (limitation of negative sampling), extension! - CL gives representation learning with plentiful labeled data! TP asks this question. - Theoretical results to include in the works. - <embed src="https://mxahan.github.io/PDF_files/theory_cl.pdf" width="100%" height="850px"/> -

Anand, Ankesh, Evan Racah, Sherjil Ozair, Yoshua Bengio, Marc-Alexandre Côté, and R. Devon Hjelm. “Unsupervised state representation learning in atari.” arXiv preprint arXiv:1906.08226 (2019).

-

Kolesnikov, Alexander, Xiaohua Zhai, and Lucas Beyer. “Revisiting self-supervised visual representation learning.” In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pp. 1920-1929. 2019.

-

Insight about the network used for learning [experimentation]

-

Challenges the choice of different CNNs as network for vision tasks.

-

Experimentation with different architectures [ResNet, RevNet, VGG] and their widths and depths.

-

key findings : hat (1) lessons from architecture design in the fully supervised setting do not necessarily translate to the self-supervised setting; (2) contrary to previously popular architectures like AlexNet, in residual architectures, the final prelogits layer consistently results in the best performance; (3) the widening factor of CNNs has a drastic effect on performance of self-supervised techniques and (4) SGD training of linear logistic regression may require very long time to converge

-

pretext tasks for self-supervised learning should not be considered in isolation, but in conjunction with underlying architectures.

-

-

Hendrycks, Dan, Mantas Mazeika, Saurav Kadavath, and Dawn Song. “Using self-supervised learning can improve model robustness and uncertainty.” arXiv preprint arXiv:1906.12340 (2019).

- TP: self-supervision can benefit robustness in a variety of ways, including robustness to adversarial examples, label corruption, and common input corruptions (how is this new!!)

- Interesting problem and experiment setup for each of the problems

- Besides a collection of techniques allowing models to catch up to full supervision, SSL is used two in conjunction of providing strong regularization that improves robustness and uncertainty estimation

- TP: self-supervision can benefit robustness in a variety of ways, including robustness to adversarial examples, label corruption, and common input corruptions (how is this new!!)

2020

-

Zhan, X., Xie, J., Liu, Z., Ong, Y. S., & Loy, C. C. (2020). Online deep clustering for unsupervised representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6688-6697).

- RG: training schedule alternating between feature clustering and network update leads to unstable representations learning.

- propose Online Deep Clustering (ODC) that performs clustering and network update simultaneously rather than alternatingly??

- insight : the cluster centroids should evolve steadily in keeping the classifier stably updated

- design and maintain two dynamic memory modules (i) samples memory to store samples’ labels and features, (ii) centroids memory for centroids evolution

- break down the abrupt global clustering into steady memory update and batchwise label re-assignment. The process is integrated into network update iterations.

-

Wei, C., Wang, H., Shen, W., & Yuille, A. (2020). Co2: Consistent contrast for unsupervised visual representation learning. arXiv preprint arXiv:2010.02217.

- introduces a consistency regularization term into the current contrastive learning framework.

- addressing the problem of treating all as equal negatives (class collision problem)

- Figure 1 (b) summarizes the method.

- Equation 2,3,4 (very easy idea)

- takes the corresponding similarity of a positive crop as a pseudo label, and encourages consistency between these two similarities

- view this instance discrimination task from the perspective of semi-supervised learning.

- introduces a consistency regularization term into the current contrastive learning framework.

-

Cai, T. T., Frankle, J., Schwab, D. J., & Morcos, A. S. (2020). Are all negatives created equal in contrastive instance discrimination?. arXiv preprint arXiv:2010.06682.

- divided negatives by their difficulty for a given query

- studied which difficulty ranges were most important for learning useful representations (how?)

-

a small minority of negatives (hardest 5%—were) both necessary and sufficient for the downstream task to reach full accuracy

-

The hardest 0.1% of negatives are unnecessary and sometimes detrimental

-

Hypothesis: there may be unexploited opportunities to reduce CID computation for any particular query, only a small fraction of the negatives are necessary

- To compute the difficulty for a set of negatives given a particular query, the dot product between the normalized contrastive-space embedding of each negative with the normalized contrastive-space embedding of the query

- Hard negatives are more semantically similar to the query

- Some of the easiest negatives are both anti-correlated (dot prod -1) and semantically similar to the query

- only issue for cosine distance.

- Some negatives are consistently easy or hard across queries.

- Some of the easiest negatives are both anti-correlated (dot prod -1) and semantically similar to the query

- Hard negatives are more semantically similar to the query

- divided negatives by their difficulty for a given query

-

Sohoni, Nimit, Jared Dunnmon, Geoffrey Angus, Albert Gu, and Christopher Ré. “No subclass left behind: Fine-grained robustness in coarse-grained classification problems.” Advances in Neural Information Processing Systems 33 (2020): 19339-19352.

- Hidden stratification: unavailable subclass labels

- TP: GEORGE, a method to both measure and mitigate hidden stratification even when subclass labels are unknown.

- TP: estimate subclass labels for the training data via clustering techniques (Estimation)

- use these approximate subclass labels as a form of noisy supervision in a distributionally robust optimization objective (exploiting)

- TP: GEORGE, a method to both measure and mitigate hidden stratification even when subclass labels are unknown.

-

Paper construction: generative background for data labeling process [figure 2]

-

Reason of hidden stratification: Inherent hardness and Dataset imbalance

-

Method overview [figure 4]: includes two step training (i. training with classification, dimentional reduction of last layer and ii. fine tune.)

- Hidden stratification: unavailable subclass labels

-

Patacchiola, Massimiliano, and Amos Storkey. “Self-supervised relational reasoning for representation learning.” arXiv preprint arXiv:2006.05849 (2020).

- Relation network head instead of direct contrastivie loss [architectural][Pretext task design]

- differentiate from previous one in several ways:

- (i) TP use relational reasoning on unlabeled data (previously on unlabeled data);

- (ii) here TP focus on relations between different views of the same object (intra-reasoning) and between different objects in different scenes (inter-reasoning); [previously withing scene]

- (iii) in previous work training the relation head was the main goal, here is a pretext task for learning useful representations in the underlying backbone

- differentiate from previous one in several ways:

- Related works: pretext task, metric learning, CL, pseudo labeling, InfoMax,

-

Intra-inter reasoning increases mutual information.

- Easy-pesy loss functions to minimize (care about the sharping of the weight of the loss function!)

- Relation network head instead of direct contrastivie loss [architectural][Pretext task design]

-

Huang, Zhenyu, Peng Hu, Joey Tianyi Zhou, Jiancheng Lv, and Xi Peng. “Partially view-aligned clustering.” Advances in Neural Information Processing Systems 33 (2020).

-

Zhu, Benjin, Junqiang Huang, Zeming Li, Xiangyu Zhang, and Jian Sun. “EqCo: Equivalent Rules for Self-supervised Contrastive Learning.” arXiv preprint arXiv:2010.01929 (2020).

- Theoretical paper: Challenges the large number of negative samples [algorithmic]

- Though more negative pairs are usually reported to derive better results, Interpretation: it may be because the hyper-parameters in the loss are not set to the optimum according to different numbers of keys respectively

- Rule to set the hyperparameters

-

SiMo: Alternate for the InfoNCE

- EqCo: Concept of Batch Size (N) and Negative Sample number (K)

- CPC work follow-up

- the learning rate should be adjusted proportional to the number of queries N per batch

- linear scaling rule needs to be applied corresponding to N rather than K

- EqCo: Concept of Batch Size (N) and Negative Sample number (K)

-

SiMo: cancel the memory bank as rely on a few negative samples per batch. Instead, use the momentum encoder to extract both positive and negative key embeddings from the current batch.

- Theoretical paper: Challenges the large number of negative samples [algorithmic]

-

Miech, Antoine, Jean-Baptiste Alayrac, Lucas Smaira, Ivan Laptev, Josef Sivic, and Andrew Zisserman. “End-to-end learning of visual representations from uncurated instructional videos.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9879-9889. 2020.

-

Experiments with Dataset: HowTo100M (task progression) [video with narration]

-

This paper: (multi-instance learning) MIL-NCE (to address misalignment in video narration)!! HOW? Requires instructional videos! (video with text)

-

Video representation learning: shows effectiveness in four downstream tasks: AR (HMDB, UCF, Kinetics), action localization (Youtube8M, crosstask), action segmentation (COIN), Text-to-video retrieval (YouCook2, MSR-VTT).

-

TP: Learn video representation from narration only (instructional video)!

-

Related works: Learning visual representation from unlabeled videos. (ii) Multiple instance learning for video understanding (MIL) [TP: connect MCE with MIL]

-

Two networks in the NCE Calculation : [unstable targets!!]

-

Experiments: Network: 3D CNN

-

-

Xie, Zhenda, Yutong Lin, Zheng Zhang, Yue Cao, Stephen Lin, and Han Hu. “Propagate Yourself: Exploring Pixel-Level Consistency for Unsupervised Visual Representation Learning.” arXiv preprint arXiv:2011.10043 (2020).

- Argue that instance level CL reaches suboptimal representation!

- Can we go better.

- Alternative to instance-level pretext learning - Pixel-level pretext learning!

- Pixel level pretext learning! pixel to propagation consistency!!

- Avail both backbone and head network! to reuse

- complementary to instance level CL

- How to define pixel level pretext tasks!

- Why instance-label is sub-optimal? How? Benchmarking!

- Dense feature learning

- Pixel level pretext learning! pixel to propagation consistency!!

- Application: Object detection (Pascal VOC object detection), semantic segmentation

- Pixel level pretext tasks

- Each pixel is a class!! what!!

- Features from same pixels are same !

- PixContrast: Training data collected in self-supervised manner

- requires pixel feature vector !

- Feature map is warped into the original image space

- Now closer pictures together and …. contrastive setup

- Learns spatially sensitive information

- Each pixel is a class!! what!!

- Pixel level pretext tasks

- Pixel-to-propagation consistency !! (pixpro)

- positive pair obtaining methods

- asymmetric pipeline

- Learns spatial smoothness information

- Pixel propagation module

- pixel to propagation consisency loss

- PPM block (Equation: 3,4): Figure 3

- Argue that instance level CL reaches suboptimal representation!

-

Piergiovanni, A. J., Anelia Angelova, and Michael S. Ryoo. “Evolving losses for unsupervised video representation learning.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 133-142. 2020.

- video representation learning! (generic and transfer) (ELo)

- Video object detection

- Zeroshot and fewshot AcRecog

- introduce concept of loss function evolving (automatically find optimal combination of loss functions capturing many (self-supervised) tasks and modalities)

- using an evolutionary search algorithm (!!)

- introduce concept of loss function evolving (automatically find optimal combination of loss functions capturing many (self-supervised) tasks and modalities)

-

Evaluation using distribution matching and Zipf’s law!

-

only outperformed by fully labeled dataset

- This paper: new unsupervised learning of video representations from unlabeled video data.

- multimodal, multitask, unsupervised learning

- Youtube dataset

- combination of single modal tasks and multi-modal tasks

- too much task! how they combined it!! Engineering problem

- evolutionary algorithm to solve these puzzle

- Power law constraints and KL divergence

- Evolutionary search for a loss function that automatically combines self-supervised and distillation task

- unsupervised representation evaluation metric based on power law distribution matching

- multimodal, multitask, unsupervised learning

- Multimodal task, multimodal distillation

- show their efficacy by the power distribution of video classes (zipf’s law)

- Figure 2:

- overwhelming of tasks! what if no commonalities.

- Hypo: Synchronized multi-modal data source should benefit representation learning

- Distillation losses between the multiple streams of networks + self supervised loss

- Methods

- Multimodal learning

- Evolving an unsupervised loss function

- [0-1] constraints

- zipfs distribution matching

- Fitness measurement - k-means clustering

- use smaller subset of data for representation learning

- Cluster the learned representations

- the activity classes of videos follow a Zipf distribution

- HMDB, AVA, Kinetics dataset, UCF101

- ELo methods with baseline weakly-supervised methods

- Self-supervised learning

- reconstruction (encoder-decoder) and prediction tasks (L2 distance minimization)

- Temporal ordering

- Multi-modal contrastive loss {maxmargin loss}

- ELo and ELo+Distillation

- This paper: new unsupervised learning of video representations from unlabeled video data.

-

- video representation learning! (generic and transfer) (ELo)

-

Chen, Ting, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, and Geoffrey Hinton. “Big self-supervised models are strong semi-supervised learners.” arXiv preprint arXiv:2006.10029 (2020).

-

Empirical paper

- unsupervised pretrain (task agnostic), semi-supervised learning (task dependent), fine-tuning

- Impact of Big (wide and deep) network

- More benefited from the unsupervised pretraining

- Big Network: Good for learning all the Representations

- Not necessarily require in one particular task - So we can leave the unnecessary information

- More improve by distillation after fine tuneing

- Impact of Big (wide and deep) network

-

Wow: Maximum use of works: Big network to learn representations, and fine-tune then distill the task (supervised and task specific fine-tune) to a smaller network

- Proposed Methods (3 steps) [this paper]

- Unsupervised pretraining (SimCLR v2)

- Supervised fine-tune using smaller labeled data

- Distilling with unlabeled examples from big network for transferring task-specific knowledge into smaller network

- This paper:

- Investigate unsupervised pretraining and selfsupervised fine-tune

- Finds: network size is important

- Propose to use big unsupervised_pretraining-fine-tuning network to distill the task-specific knowledge to small network.

- Figure 3 says all

- Investigate unsupervised pretraining and selfsupervised fine-tune

- Key contribution

- Big networks works best (in unsupervised pretraining - very few data fine-tune) althought they may overfit!!!

- Big model learns general representations, may not necessary when task-specific requirement

- Importance of multilayer transform head (intuitive because of the transfer properties!! great: since we are going away from metric dimension projection, so more general features)

-

Section 2: Methods (details from loss to implementation)

- Empirical study findings

- Bigger model are label efficient

- Bigger/Deeper Projection Heads Improve Representation Learning

- Distillation using unlabeled data improves semi-supervised learning

- Bigger model are label efficient

-

-

Gordon, Daniel, Kiana Ehsani, Dieter Fox, and Ali Farhadi. “Watching the world go by: Representation learning from unlabeled videos.” arXiv preprint arXiv:2003.07990 (2020).

- Multi-frame multi-pairs positive negative (single imgae)- instance discrimination

-

Tao, Li, Xueting Wang, and Toshihiko Yamasaki. “Self-supervised video representation learning using inter-intra contrastive framework.” In Proceedings of the 28th ACM International Conference on Multimedia, pp. 2193-2201. 2020.

-

Notion of intra-positive (augmentation, optical flow, frame differences, color of same frames)

-

Notion of intra-negative (frame shuffling, repeating)

-

Inter negative (irrelevant video clips - Different time or videos)

-

Two (Multi) Views (same time - positive keys) - and intra-negative

-

Figure 2 (memory bank approach)

-

Consider contrastive from both views.

-

-

Chen, Ting, and Lala Li. “Intriguing Properties of Contrastive Losses.” arXiv preprint arXiv:2011.02803 (2020).

- Generalize the CL loss to broader family of losses

- weighted sum of alignment and distribution loss

- Alignment: align under some transformation

- Distribution: Match a prior distribution

- weighted sum of alignment and distribution loss

- Experiment with weights, temperature, and multihead projection

- Study feature suppression!! (competing features)

- Impacts of final loss!

- Impacts of data augmentation

- Study feature suppression!! (competing features)

-

Suppression feature phenomena: Reduce unimportant features

- Experiments

- two ways to construct data

- Expand beyond the uniform hyperspace prior

- Can’t rely on logSumExp setting in NT-Xent loss

- Requires new optimization (Sliced Wasserstein distance loss)

- Algorithm 1

- Impacts of temperature and loss weights

- Feature suppression

- Target: Remove easy-to-learn but less transferable features for CL (e.g. Color distribution)

- Experiments by creating the dataset

- Digit on imagenet dataset

- RandBit dataset

- Experiments

- Generalize the CL loss to broader family of losses

-

Xiao, Tete, Xiaolong Wang, Alexei A. Efros, and Trevor Darrell. “What should not be contrastive in contrastive learning.” arXiv preprint arXiv:2008.05659 (2020).

- What if downstream tasks violates data augmentation (invariance) assumption!

- Requires prior knowledge of the final tasks

- This paper: Task-independent invariance

- Requires separate embedding spaces! (how much computation increases, redundant!)

- Surely, multihead networks and shared backbones

- new idea: Invariance to all but one augmentation !!

- Surely, multihead networks and shared backbones

- Requires separate embedding spaces! (how much computation increases, redundant!)

-

Pretext tasks: Tries to recover transformation between views

-

Contrastive learning: learn the invariant of the transformations

- Is augmentation helpful: Not always!

- rotation invariance removes the orientation senses! Why not keep both! disentanglement and the multitask!

- This paper: Multi embedding space (transfer the shared backbones and task specific heads)

- Each head is sensitive to all but one transformations

- LooC: (Leave-one-out Contrastive Learning)

- Multi augmentation contrastive learning

- view generation and embedded space

- Figure 2 (crack of jack)

- Good setup to apply the ranking loss function

- Careful with the notation (bad notation)

- instances and their augmentations!

- Is augmentation helpful: Not always!

- What if downstream tasks violates data augmentation (invariance) assumption!

-

Tschannen, Michael, Josip Djolonga, Marvin Ritter, Aravindh Mahendran, Neil Houlsby, Sylvain Gelly, and Mario Lucic. “Self-supervised learning of video-induced visual invariances.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 13806-13815. 2020.

- Framework to learn image representations from non-curated videos in the wild by learning frame-, shot-, and video-level invariances

- benefit greatly from exploiting video-induced invariances,

-

Experiment with Youtube8M (3.7 M

- Scale-able to image and video data. (however requires frame label encoding!! what about video AR networks)

- Framework to learn image representations from non-curated videos in the wild by learning frame-, shot-, and video-level invariances

-

Ma, Shuang, Zhaoyang Zeng, Daniel McDuff, and Yale Song. “Learning Audio-Visual Representations with Active Contrastive Coding.” arXiv preprint arXiv:2009.09805 (2020).

-

Tao, Li, Xueting Wang, and Toshihiko Yamasaki. “Self-Supervised Video Representation Using Pretext-Contrastive Learning.” arXiv preprint arXiv:2010.15464 (2020).

-

Tasks and Contrastive setup connection (PCL)

-

hypersphere features spaces

-

Combine Pretext(some tasks, intra information)+Contrastive (similar/dissimilarity, inter-information) losses

-

Assumption: pretext and contrastive learning doing the same representation.

-

Loss function (Eq-6): Contrast (same body +head1)+pretext task (same body + head2)! - Joint optimization

-

Figure 1- tells the story

-

Three pretext tasks (3DRotNet, VCOP, VCP) - Experiment section

-

Both RGB and Frame difference

-

Downstrem tasks (UCF and HMDB51)

-

Some drawbacks at the discussion section.

-

-

-

Li, Junnan, Pan Zhou, Caiming Xiong, Richard Socher, and Steven CH Hoi. “Prototypical contrastive learning of unsupervised representations.” arXiv preprint arXiv:2005.04966 (2020).

- Addresses the issues of instance wise learning (?)

- issue 1: semantic structure missing

- claims to do two things

- Learn low-level features for instance discrimination

- encode semantic structure of the data

- prototypes as latent variables to help find the maximum-likelihood estimation of the network parameters in an Expectation-Maximization framework.

- E-step as finding the distribution of prototypes via clustering

- M-step as optimizing the network via contrastive learning

-

Offers new loss function ProtoNCE (Generalized InfoNCE)

- Show performance for the unsupervised representation learning benchmarking (?) and low-resolution transfer tasks

- Prototype: a representative embedding for a group of semantically similar instances

- prototype finding by standard clustering methods

- Prototype: a representative embedding for a group of semantically similar instances

-

Goal described in figure 1

- EM problem?

- goal is to find the parameters of a Deep Neural Network (DNN) that best describes the data distribution, by iteratively approximating and maximizing the log-likelihood function.

- assumption that the data distribution around each prototype is isotropic Gaussian

-

Related works: MoCo, Deep unsupervised Clustering: not transferable?

- Prototypical Contrastive Learning (PCL)

- See the math notes from section 3

- EM problem?

-

Figure 2 - Overview of methods

- Addresses the issues of instance wise learning (?)

-

Caron, Mathilde, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, and Armand Joulin. “Unsupervised learning of visual features by contrasting cluster assignments.” arXiv preprint arXiv:2006.09882 (2020).

- SwAV (online algorithm) [swapped assignments between multiple vies of same image]

-

Contrastive learning, clustering

-

Predict cluster from different representation, memory efficiency!

- ‘code’ consistency between image and its transformation {target}

- similarity is formulated as a swapped prediction problem between positive pairs

- no negative examples

- the minibatch clustering methods implicitly prevent collapse of the representation space by encouraging samples in a batch to be distributed evenly to different clusters.

-

online code computation

-

Features and codes are learnt online

-

multi-crop: Smaller image with multiple views: Efficient calculation

-

validation: ImageNet linear evaluation protocol

-

Interested related work section

-

Key motivation: Contrastive instance learning

-

Partition constraint (batch wise normalization) to avoid trivial solution

-

Chen, Xinlei, and Kaiming He. “Exploring Simple Siamese Representation Learning.” arXiv preprint arXiv:2011.10566 (2020).

-

Not using Negative samples, large batch or Momentum encoder!!

-

Care about Prevention of collapsing to constant (one way is contrastive learning, another way - Clustering, or online clustering, BYOL)

-

Concepts in figure 1 (SimSiam method)

-

Stop collapsing by introducing the stop gradient operation.

- interesting section in 3

- Loss SimCLR (but differently ) [eq 1 and eq 2]

- Detailed Eq 3 and eq 4

- Empirically shown to avoid the trivial solution

- interesting section in 3

-

-

Morgado, Pedro, Nuno Vasconcelos, and Ishan Misra. “Audio-visual instance discrimination with cross-modal agreement.” arXiv preprint arXiv:2004.12943 (2020).

- learning audio and video representation [audio to video and video to audio!]

- How they showed its better??

- Exploit cross-modal agreement [what setup!] how it make sense!

-

consider in-sync audio video, proposed AVID (au-visu-instan-discrim)

- Experiments with UCF-101, HMDB-51

- Discussed limitation AVID & proposed improvement

- Optimization method - [dimentional reduction by LeCunn and NCE paper]

- Training procedure in section 3.2 AVID

- Cross modal agreement!! it groups (how?) similar videos [both audio and visual]

- Optimization method - [dimentional reduction by LeCunn and NCE paper]

- Discussed limitation AVID & proposed improvement

- Prior arts - binary task of audio-video alignment instance-based

- This paper: matches in the representation embedding domain.

-

AVID calibrated by formulating CMA??

- Figure 2: Variant of avids [summary of the papers]

- This people are first to do it!!

- Joint and Self AVID are bad in result! Cross AVID is the best for generalization in results!

- CMA - Extension of the AVID [used for fine tuning]

- section 4: Loss function extension with cross- AVID and why we need this?

- Figure 2: Variant of avids [summary of the papers]

- learning audio and video representation [audio to video and video to audio!]

-

Robinson, Joshua, Ching-Yao Chuang, Suvrit Sra, and Stefanie Jegelka. “Contrastive Learning with Hard Negative Samples.” arXiv preprint arXiv:2010.04592 (2020).

- Sample good negative (difficult to distinguish) leads better represenation

- challenges: No label! unsupervised method! Control the hardness!

- enables learning with fewest instances and distance maximization.

-

Problem (1): what is true label? sol: Positive unlabeled learning !!!

-

Problem (2): Efficient sampling? sol: efficient importance sampling!!! (consider lack of dissimilarity information)!!!

-

Section 3 most important!

- section 4 interesting

- Sample good negative (difficult to distinguish) leads better represenation

-

Chuang, Ching-Yao, Joshua Robinson, Yen-Chen Lin, Antonio Torralba, and Stefanie Jegelka. “Debiased contrastive learning.” Advances in Neural Information Processing Systems 33 (2020).

-

Sample bias [negatives are actually positive! since randomly sampled]

-

need unbiased - improves vision, NLP and reinforcement tasks.

-

Related to Positive unlabeled learning

-

interesting results

-

-

Zhao, Nanxuan, Zhirong Wu, Rynson WH Lau, and Stephen Lin. “What makes instance discrimination good for transfer learning?.” arXiv preprint arXiv:2006.06606 (2020).

-

Metzger, Sean, Aravind Srinivas, Trevor Darrell, and Kurt Keutzer. “Evaluating Self-Supervised Pretraining Without Using Labels.” arXiv preprint arXiv:2009.07724 (2020).

-

Bhardwaj, Sangnie, Ian Fischer, Johannes Ballé, and Troy Chinen. “An Unsupervised Information-Theoretic Perceptual Quality Metric.” Advances in Neural Information Processing Systems 33 (2020).

-

Qian, Rui, Tianjian Meng, Boqing Gong, Ming-Hsuan Yang, Huisheng Wang, Serge Belongie, and Yin Cui. “Spatiotemporal contrastive video representation learning.” arXiv preprint arXiv:2008.03800 (2020).

-

unlabeled videos! video representation, pretext task! (CVRL)

-

simple idea: same video together and different videos differ in embedding space. (TCN works on same video)

-

Video SimCLR?

-

Inter video clips (positive negatives)

- method

- Simclr setup for video (InfoNCE loss)

- Video encoder (3D resnets)

- Temporal augmentation for same video (good for them but not Ubiquitous)

- Image based spatial augmentation (positive)

- Different video (negative)

- Downstream Tasks

- Action Classification

- Action Detection

- method

-

-

Wang, Tongzhou, and Phillip Isola. “Understanding contrastive representation learning through alignment and uniformity on the hypersphere.” In International Conference on Machine Learning, pp. 9929-9939. PMLR, 2020.

- How to contraint on these and they perform better? weighted loss

- Alignment (how close the positive features) [Epos[f(x)-f(y)]2]

- Uniformly [take all spaces in the hyperplane] [little complex but tangible 4.1.2]

- l_uniform loss definition [!!]

- Interpretation of 4.2 see our future paper !!

- cluster need to form spherical cap

- Theoretical metric for above two constraints??

- Congruous with CL

- gaussing RBF kernel e^{[f(x) -f(y)]^2} helps on uniform distribution achieving.

- Theoretical metric for above two constraints??

- Result figure-7 [interesting]

- Alignment and uniform loss

- How to contraint on these and they perform better? weighted loss

-

Xiong, Yuwen, Mengye Ren, and Raquel Urtasun. “LoCo: Local contrastive representation learning.” arXiv preprint arXiv:2008.01342 (2020).

-

Kalantidis, Yannis, Mert Bulent Sariyildiz, Noe Pion, Philippe Weinzaepfel, and Diane Larlus. “Hard negative mixing for contrastive learning.” arXiv preprint arXiv:2010.01028 (2020).

-

TP (MoCHi): The effect of Hard negatives (how, definition?)

-

TP: feature level mixing for hard negatives (minimal computational overhead, momentum encoder) by synthesizing hard negatives (!!)

-

Related works: Mixup workshop

-

-

Grill, Jean-Bastien, Florian Strub, Florent Altché, Corentin Tallec, Pierre H. Richemond, Elena Buchatskaya, Carl Doersch et al. “Bootstrap your own latent: A new approach to self-supervised learning.” arXiv preprint arXiv:2006.07733 (2020).

-

Unsupervised Representation learning in a discriminative method. (BOYL)

-

Alternative of contrastive learning methods (as CL depends on batch size, image augmentation method, memory bank, resilient). [No negative examples]

-

Online and Target network. [Augmented image output in online network should be close to main image in target network.] What about all zeros! (Empirically slow moving average helps to avoid that)

-

Motivation [section 3 method]

-

similarity constraint between positive keys are also enforced through a prediction problem from an online network to an offline momentum-updated network

- BYOL tries to match the prediction from an online network to a randomly initialised offline network. This iterations lead to better representation than those of the random offline network.

- By continually improving the offline network through the momentum update, the quality of the representation is bootstrapped from just the random initialised network

-

All about architecture! [encoder, projection, predictor and loss function]

-

Works only with batch normalization - else mode collapse

- More criticism

-

-

Qi, Di, Lin Su, Jia Song, Edward Cui, Taroon Bharti, and Arun Sacheti. “Imagebert: Cross-modal pre-training with large-scale weak-supervised image-text data.” arXiv preprint arXiv:2001.07966 (2020).

-

vision pre-training /cross modal pretraining

-

New data collection (LAIT)

- pretraining (see the loss functions)

- Image/text from same context? (ITM)

- Missing pixel detection?

- Masked object Classification (MOC)

- Masked region feature regression (MRFR)

- Masked Language Models

- Fine tune Tasks

- Binary classification losses

- Multi-class classification losses

- Triplet loss

- pretraining (see the loss functions)

-

Multistage pretraining

- Experimented with VQA and others. image language

-

-

Purushwalkam, Senthil, and Abhinav Gupta. “demystifying contrastive self-supervised learning: Invariances, augmentations and dataset biases.” arXiv preprint arXiv:2007.13916 (2020).

- object detection and classification

- quantitative experiment to Demystify CL gains! (reason behind success)

- Observation1: MOCO and PIRL (occlusion invariant)

- but Fails to capture viewpoint

- gain from object-centric dataset - imagenet!

- Propose methods to leverage learn from unstructured video (viewpoint invariant)

- Observation1: MOCO and PIRL (occlusion invariant)

- quantitative experiment to Demystify CL gains! (reason behind success)

-

Utility of systems: How much invarinces the system encodes

-

most contrastive setup - occlusion invariant! what about viewpoint invariant?

- Related works

- Pretext tasks

- Video SSL

- Understanding SSRL

- Mutual information

- This work - Why CL is useful

- study two aspects: (invariances encoding & role of dataset)

- Pretext tasks

- Demystifying Contrastive SSL

- what is good Representation? Utilitarian analysis: how good the downstream task is?

- What about the insights? and qualitative analysis?

- Measuring Invariances

- What invariance do we need? - invariant to all transformation!!

- Viewpoint change, deformation, illumination, occlusion, category instance

- Metrics: Firing representation, global firing rate, local firing rate, target conditioned invariance, representation invariant score.

- Experimental dataset

- occlusion (GOR-10K), viewpoint+instance invariance (Pascal3D+)

- image and video careful augmentation

- What invariance do we need? - invariant to all transformation!!

- what is good Representation? Utilitarian analysis: how good the downstream task is?

-

- object detection and classification

-

Ermolov, Aleksandr, Aliaksandr Siarohin, Enver Sangineto, and Nicu Sebe. “Whitening for self-supervised representation learning.” arXiv preprint arXiv:2007.06346 (2020).

- New loss function (why? and where it works?) W-MSE

- Generalization of the BYOL approach?

- No negative examples (the scatters are preserved)

- Whitening feature is the key: Cholesky decomposition to find Whitening matrix and Backprogation

- Triangular decomposition of covariance matrix and inversing.

-

Whitening operation (scattering effect)

- New loss function (why? and where it works?) W-MSE

-

Ebbers, Janek, Michael Kuhlmann, and Reinhold Haeb-Umbach. “Adversarial Contrastive Predictive Coding for Unsupervised Learning of Disentangled Representations.” arXiv preprint arXiv:2005.12963 (2020).

-

video deep infomax: UCF101 dataset

-

Local and global features:

-

self note: go over this

-

-

Devon, R. “Representation Learning with Video Deep InfoMax.” arXiv preprint arXiv:2007.13278 (2020).

- DIM: prediction tasks between local and global features.

- For video (playing with sampling rate of the views)

- DIM: prediction tasks between local and global features.

-

Liang, Weixin, James Zou, and Zhou Yu. “Alice: Active learning with contrastive natural language explanations.” arXiv preprint arXiv:2009.10259 (2020).

-

Contrastive natural language!!

-

Experiments - (bird classification and Social relationship classifier!!)

- key steps

- run basic Classifier

- fit multivariate gaussian for all class (embedding!!), and find b pair of classes with lowest JS divergence.

- contrastive query to machine understandable form (important and critical part!!). [crop the most informative parts and retrain.]

- neural arch. morphing!! (heuristic and interesting parts) [local, super classifier and attention mechanism!]

- key steps

-

-

Ma, Shuang, Zhaoyang Zeng, Daniel McDuff, and Yale Song. “Learning Audio-Visual Representations with Active Contrastive Coding.” arXiv preprint arXiv:2009.09805 (2020).

-

Park, Taesung, Alexei A. Efros, Richard Zhang, and Jun-Yan Zhu. “Contrastive Learning for Unpaired Image-to-Image Translation.” arXiv preprint arXiv:2007.15651 (2020).

-

Contrastive loss (Same patch of input - output are +ve and rest of the patches are -ve example) [algorithmic]

-

Trains the encoder parts more! (Fig 1, 2) ; Decoders train only on adversarial losses.

-

Contribution in loss (SimCLR) kinda motivation

-

-

Guo, Daniel, Bernardo Avila Pires, Bilal Piot, Jean-bastien Grill, Florent Altché, Rémi Munos, and Mohammad Gheshlaghi Azar. “Bootstrap Latent-Predictive Representations for Multitask Reinforcement Learning.” arXiv preprint arXiv:2004.14646 (2020).

-

Notation Caution. Representation learning [latent space for observe and history]

-

States to future latent observation to future state.

-

Latent embedding of history.

-

Alternative for Deep RL

- Experiments

- DMLab-30

- Compared for PopArt-IMPALA (RNN) with DRAW, Pixel-control, Contrastive predictive control.

- DMLab-30

-

Partially observable environments and Predictive representation.

-

Learn agent state by predictive representation.

-

RNN compresses history from the observations and actions; History as input for new decision making

- Interesting section 3!

-

-

Tian, Yonglong, Chen Sun, Ben Poole, Dilip Krishnan, Cordelia Schmid, and Phillip Isola. “What makes for good views for contrastive learning.” arXiv preprint arXiv:2005.10243 (2020).

-

Multi-view in-variance

-

What is invariant?? (shared information between views)

-

balance to share the information we need in view!!

- Questions

- Knowing task what will be the view??!

- generate views to control the MI

-

Maximize task related shared information, minimize nuisance variables. (InfoMin principle)

-

Contributions (4 - method, representation and task-dependencies, ImageNet experimentation)

- Figure 1: summary.

- Optimal view encoder.

- Sufficient (careful notation overloaded! all info there), minimal sufficient (someinfo dropped), optimal representation (4.3)- only task specific information retrieved

- Optimal view encoder.

- InfoMin Principle: views should have different background noise else min encoder reduces the nuisance variable info. (proposition 4.1 with constraints.)

-

suggestion: Make contrastive learning hard

-

Figure 2: interesting. [experiment - 4.2]

-

Figure 3: U-shape MI curve.

-

section 6: different views and info sharing.

-

-

-

Lu, Jiasen, Vedanuj Goswami, Marcus Rohrbach, Devi Parikh, and Stefan Lee. “12-in-1: Multi-task vision and language representation learning.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10437-10446. 2020.

-

MTL + Dynamic “stop and go” schedule. [multi-modal representation learning]

-

ViLBERT base architecture.

-

-

Misra, Ishan, and Laurens van der Maaten. “Self-supervised learning of pretext-invariant representations.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6707-6717. 2020.

-

Pre-training method [algorithmic]

-

Pretext learning with transformation invariant + data augmentation invariant

- See the loss functions

- Tries to retain small amount of the transformation properties too !!

- Use contrastive learning (See NCE)

- Maximize MI

- Utilizes extra head on the features.

- See the loss functions

-

Motivation from predicting video frames

-

Experiment of jigsaw pretext learning

- Hypothesis: Representation of image and its transformation should be same

- Use different head for image and jigsaw counterpart of that particular image.

- Motivation for learning some extra things by different head network

- Use different head for image and jigsaw counterpart of that particular image.

-

Noise Contrastive learning (contrast with other images)

-

As two head so two component of contrastive loss. (One component to dampen memory update.)

-

Implemented on ResNet

-

PIRL

-

-

-

Srinivas, Aravind, Michael Laskin, and Pieter Abbeel. “Curl: Contrastive unsupervised representations for reinforcement learning.” arXiv preprint arXiv:2004.04136 (2020).

-

Chen, Ting, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. “A simple framework for contrastive learning of visual representations.” arXiv preprint arXiv:2002.05709 (2020).

-

Truely simple! (SimCLR) [algorithmic]

-

Two transfers for each image and representation

-

Same origin image should be more similar than the others.

-

Contrastive (negative) examples are from image other than that.

-

A nonlinear projection head followed by the representation helps.

-

-

Asano, Yuki M., Mandela Patrick, Christian Rupprecht, and Andrea Vedaldi. “Labelling unlabelled videos from scratch with multi-modal self-supervision.” arXiv preprint arXiv:2006.13662 (2020).

-

clustering method that allows pseudo-labelling of a video dataset without any human annotations, by leveraging the natural correspondence between the audio and visual modalities

-

[Multi-Modal representation learning]

-

-

Patrick, Mandela, Yuki M. Asano, Ruth Fong, João F. Henriques, Geoffrey Zweig, and Andrea Vedaldi. “Multi-modal self-supervision from generalized data transformations.” arXiv preprint arXiv:2003.04298 (2020).

- [Multi-Modal representation learning]

-

Khosla, Prannay, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. “Supervised contrastive learning.” arXiv preprint arXiv:2004.11362 (2020).

- [Algorithmic]

-

He, Kaiming, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. “Momentum contrast for unsupervised visual representation learning.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9729-9738. 2020.

-

Dynamic dictionary with MA encoder [Algorithmic] Contribution

-

(query) encoder and (key) momentum encoder.

- The update of key encoder in a momentum fashion

- Query updated by back propagation

-

Algorithm [1] is the Core: A combination of end-end & the Memory bank

-

key and query match but the queue would not match!

- Momentum parametric dependencies - Start with the key and query encoder as the Same - key updates slowly, query updates with SGD.

-

-

Huynh, Tri, Simon Kornblith, Matthew R. Walter, Michael Maire, and Maryam Khademi. “Boosting Contrastive Self-Supervised Learning with False Negative Cancellation.” arXiv preprint arXiv:2011.11765 (2020).

- False negative Problem!! (detail analysis) [sampling method for CL]

- Aim: Boosting results

-

Methods to Mitigate false negative impacts (how? what? how much impact! significant means?? what are other methods?)

-

Hypothesis: Randomly taken negative samples (leaked negative)

- Overview

- identify false negative (how?): Finding potential False negative sample [3.2.3]

- Then false negative elimination and false negative attraction

- Contributions

- applicable on top of existing cont. learning

- Overview

- False negative Problem!! (detail analysis) [sampling method for CL]

-

Lee, Jason D., Qi Lei, Nikunj Saunshi, and Jiacheng Zhuo. “Predicting what you already know helps: Provable self-supervised learning.” arXiv preprint arXiv:2008.01064 (2020).

- Highly theoretical paper.

- TP: is to investigate the statistical connections between the random variables of input features and downstream labels

- Two important notion for the tasks: i) Expressivity (does the ssl good enough) ii) Sample complexity (reduce the complexity of sampling)

- TP: is to investigate the statistical connections between the random variables of input features and downstream labels

- TP: analysis on the reconstruction based SSL

-

section 3 describes the paper summary. (connected to simsiam: Section 6

-

Discusses about conditional independence (CI) condition of the samples w.r.t the labels

-

- Highly theoretical paper.

-

Dwibedi, Debidatta, Yusuf Aytar, Jonathan Tompson, Pierre Sermanet, and Andrew Zisserman. “Counting out time: Class agnostic video repetition counting in the wild.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10387-10396. 2020.

- Countix Dataset for video repeatation count. (part of Kinetics dataset)

- annotated with segments of repeated actions and corresponding counts.

-

Per-frame embedding and similarity!! (RepNet)

-

Compared with benchmark: PERTUBE and QUVA

-